AI Security Awareness

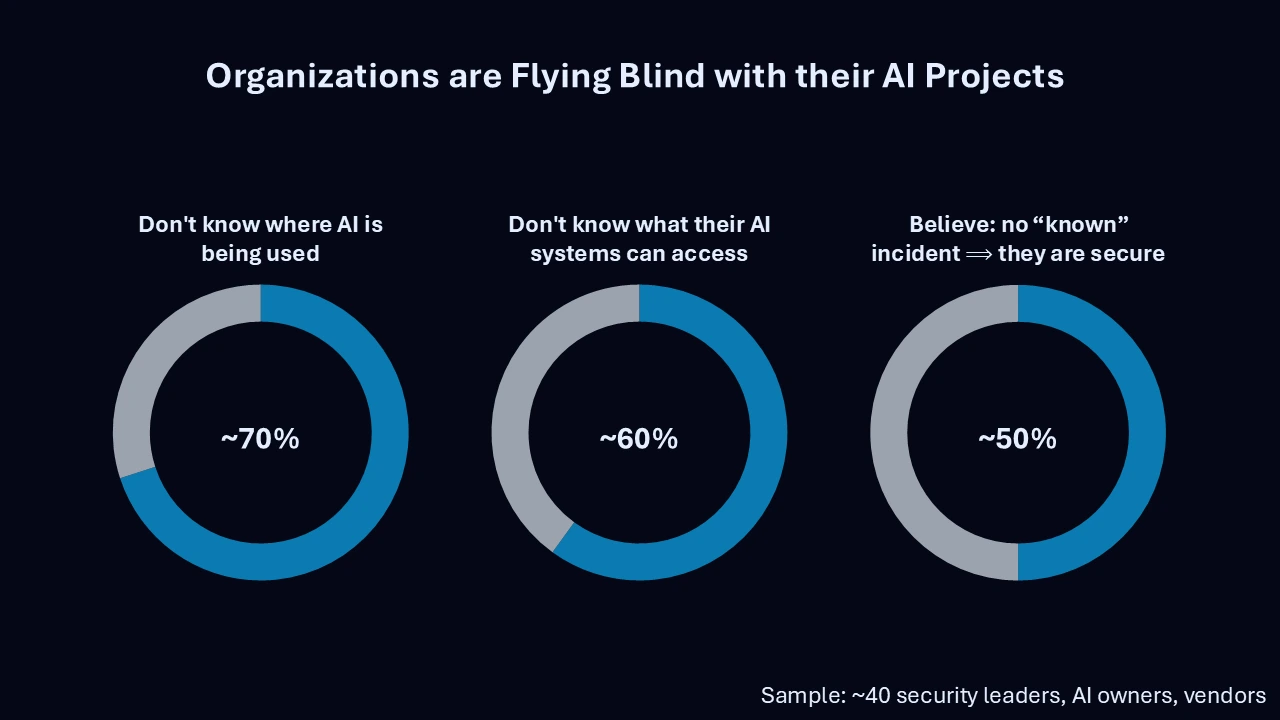

Over the last few months, we’ve spoken with ~40 security leaders and AI owners across dozens of organizations – banks, SaaS companies, large enterprises, start-ups. We have also spoken to multiple vendors who support them.

A couple of patterns are clear:

- Confidence in AI adoption is high.

- There is very little visibility into AI risk.

From our aggregated conversations:

- ~70% of organizations using internal copilots or GenAI tools cannot clearly articulate where AI is being used across teams (shadow AI is assumed, not measured).

- ~60% admit they don’t have a clear answer to what data their AI systems can actually access at runtime.

- ~50% believe they are “reasonably secure”, primarily because no incident has surfaced yet. That reasoning is risky because AI systems don’t fail like traditional software. They don’t raise obvious alarms. They fail plausibly.

These are clear symptoms of flying blind – not knowing which AI systems exist, what they can access, what actions they can take, and, critically, when they cross an acceptable boundary.

Security teams feel this acutely. Many told us they are already overwhelmed. They are reviewing AI projects manually, days or weeks after deployment, while AI-driven incidents unfold in minutes, if not seconds.

The false choice organizations face

Organizations end up with a false choice:

- Move fast with AI and accept unknown risk

- Slow down innovation to feel safe

Both are losing strategies.

The enterprises that win won’t be the ones that “waited for regulations” or banned tools. They’ll be the ones that can see their AI risk clearly enough to move faster than everyone else.